I once heard a senior leader at an org ask:

When will we have zero CVEs?

I laughed at the idea. Our processes were immature. Industry tooling was immature. I was immature. I don't laugh nearly as hard at the question anymore. Before I get to my main point, that "zero CVEs is more achievable now than it has ever been", I need to cover some background.

While researching Docker base images, I've run across a concerning sentiment. People out there either question the need for CVE scanning at all or seem to think that trying to minimize CVE counts is misguided or too difficult.1,2,3

I believe this is due to two main things:

- lack of need in one's current situation

- inability to parse and address the found issues due to volume or competing priorities

I've been wanting to write about this for some time now, as I've seen the value of CVE scanning at multiple organizations. I'm not going to try to convince you to implement CVE scanning right now. Deliberately putting it on the back burner in favor of more pressing issues is a legitimate strategic choice. Almost nothing in security is gospel, and much of it is a best-effort exercise in using the time and budget you're given.

What is a CVE?

Common Vulnerabilities and Exposures is:

... [a] system [that] provides a reference method for publicly known information-security vulnerabilities and exposures.4

CVEs describe "problems" in the software we use. A well-known example is Log4Shell (CVE-2021-44228, along with a few related CVEs)5.
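CVE identifiers follow a fixed shape: the `CVE-` prefix, a four-digit year, and a sequence number of four or more digits. That makes them easy to check mechanically, which helps when normalizing IDs pulled from different tools. A minimal sketch; `parse_cve_id` is a hypothetical helper, not part of any particular scanner:

```python
import re

# A CVE ID is "CVE-", a four-digit year, and a sequence number of four or more digits.
CVE_ID = re.compile(r"CVE-(\d{4})-(\d{4,})")


def parse_cve_id(cve_id: str) -> tuple[int, int]:
    """Split a CVE ID into (year, sequence number); reject anything malformed."""
    match = CVE_ID.fullmatch(cve_id)
    if match is None:
        raise ValueError(f"not a well-formed CVE ID: {cve_id!r}")
    return int(match.group(1)), int(match.group(2))


print(parse_cve_id("CVE-2021-44228"))  # (2021, 44228)
```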

Why Scan for CVEs?

The 2024 Verizon Data Breach Investigations Report calls out:

a critical increase in vulnerability exploitations, highlighting the need for urgent, strategic vulnerability management.6

Additionally, as of OWASP's 2021 Top 10 release, A06:2021-Vulnerable and Outdated Components ranks sixth among the listed vulnerability categories.7 That's a jump of three positions from the last official installment of the OWASP Top 10 in 2017, where the category appeared ninth as A9:2017-Using Components with Known Vulnerabilities.

The problem is not getting better; it's getting worse. It was ranked number two8 in the community survey, but the data and rubric OWASP uses ranked it as number six.

You're likely somebody, or know somebody, who had a lot of work to do the day Log4Shell went public. One of my previous orgs had a relatively easy go of it thanks to its regular CVE scanning and streamlined build and deployment systems. As of 2023, orgs were still finding Log4Shell in their environments9. I doubt that has changed. The vulnerability surely persists for numerous reasons, but you can bet one of them is a lack of CVE scanning and of scrutiny of the results.

There are two primary reasons you should scan for CVEs in your environment:

- CVEs tell you what doors to your systems are possibly left WIDE open.

- Regulatory frameworks, certifications, and internal policy often require that CVE scanning be performed and have findings remediated within a defined timeframe.

Doors to Your Environment are Possibly Left WIDE Open

If you're just starting out on your journey to scan your assets, you'll likely come out the other side with a huge pile of "What the hell do I do with this?"

In every job interview, I bring up the concept of "you can't secure what you don't know you have". It's a broad concept that covers everything from cloud resource scanning to asset inventories. CVE scanning is the next step up from knowing what you have: once you know what you have, you can start acting on that information and on the assets themselves.

CVE scanning produces a meta-inventory of your assets: scanners output the known vulnerabilities in the software you're running. Among other things, they can often also tell you:

- whether there are fixes released for the software version you are using

- whether there are known exploits in the wild
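Those two extra signals are what make a raw findings list actionable. Every scanner has its own output schema, so the following is a scanner-agnostic sketch: the `Finding` shape and the bucket names are hypothetical, and "known exploited" is assumed to come from a source such as CISA's Known Exploited Vulnerabilities catalog:

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Finding:
    """Hypothetical, scanner-agnostic finding; real tools each use their own schema."""
    cve_id: str
    package: str
    installed_version: str
    fixed_version: Optional[str]  # None when no fix has been released yet
    known_exploited: bool         # e.g., the CVE appears in CISA's KEV catalog


def triage_bucket(finding: Finding) -> str:
    """Map the two signals onto a rough, illustrative work queue."""
    if finding.known_exploited and finding.fixed_version:
        return "patch now"
    if finding.known_exploited:
        return "mitigate"        # exploited in the wild, but nothing to upgrade to
    if finding.fixed_version:
        return "routine update"  # let the normal rebuild cadence pick it up
    return "track upstream"      # no fix and no known exploit yet


def summarize(findings: list[Finding]) -> Counter:
    """Count how many findings land in each bucket."""
    return Counter(triage_bucket(f) for f in findings)
```

The ordering of the checks is a judgment call; the point is that "is there a fix?" and "is it being exploited?" already separate most of the pile.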

A CVE scan of your environment is a kind of heat map you can use to home in on pain points. Maybe your environment:

- is using older machine images than you thought
  - which results in vulnerable system packages
- is never updating Docker image sources
  - which results in vulnerable system packages
- is not updating product dependencies (e.g., Python PyPI, Ruby Gems, etc.)

CVE scanning can surface all of the above problems and more. Once you know where the hot spots are, you can get a picture of where your processes are lacking.
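Turning raw scan results into that heat map is mostly grouping and counting. A minimal sketch, assuming your scanner's output has already been flattened into `(asset, layer, cve_id)` rows; the asset names, layer labels, and `CVE-A`-style IDs are placeholders, not real data:

```python
from collections import Counter


def heat_map(rows: list[tuple[str, str, str]]) -> Counter:
    """Count distinct CVEs per (asset, layer) pair.

    "layer" is whatever origin your scanner reports, e.g. "os-packages" or
    "pypi". Duplicate rows (the same CVE reported twice) are counted once.
    """
    distinct = set(rows)
    return Counter((asset, layer) for asset, layer, _cve in distinct)


rows = [
    ("billing-image", "os-packages", "CVE-A"),
    ("billing-image", "os-packages", "CVE-A"),  # duplicate report
    ("billing-image", "os-packages", "CVE-B"),
    ("billing-image", "pypi", "CVE-C"),
    ("worker-ami", "os-packages", "CVE-A"),
]
for (asset, layer), count in heat_map(rows).most_common():
    print(f"{asset:15} {layer:12} {count}")
```

Keying on the layer as well as the asset is what distinguishes "this AMI is stale" from "this service never bumps its PyPI dependencies".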

Regulatory Frameworks, Certifications, and Internal Policy Requirements

This is the driver that seems to generate the most controversy. It's common for people outside of infosec or audit to not fully understand, or agree with, this reason. I get it. It doesn't feel great to do a thing just to "check a box". We infosec people are right there with you: we don't like checking a box for its own sake either, and we want our time to be well spent. But requirements to scan for and remediate CVEs in your environment aren't mere box-checking. The OWASP Top 10 lists back this up. So does the 2024 Verizon Data Breach Investigations Report.

Most10 cybersecurity and IT employees work at for-profit entities. These are organizations whose primary goal is, usually, to make money. They have customers. Depending on the org's industry and who its customers are, the questions it gets about its security program will be more or less sophisticated. In the world of cloud services, the lowest bar that security-conscious customers typically want to see is a SOC 2 Type II report. A SOC 2 Type II audit assesses not only whether an organization's security controls are designed properly but also whether they operate as intended over a period of time.11 It's a report you can hand to your customers and say "Here are our security controls and the proof that we adhere to them." Regular, clean SOC 2 Type II reports help keep your customers and prospective customers happy. Happy customers likely mean more money.

Sometimes you work in an industry where you have to do a thing, and there may be no way around it. Take a FedRAMP Authorization to Operate (ATO), for example. Control RA-5 mandates that you regularly scan for, remediate, and report on vulnerabilities in your environment.12 There is no realistic way around it; you have to do it if you want to maintain your ATO. Broadly, you need an ATO if you want to sell SaaS products to Federal Government agencies, and federal agencies can be big spenders.

"Are you going somewhere with this?"

Yes.

I've hopefully given a taste of why CVE scanning is important. At a minimum, even if you don't agree that it is, I hope you can see why other people do.

Let's shift focus from why to how.

Dream Scenario13

Let's say your org does the following broad things:

- regularly and automatically rebuilds, releases, and consumes machine images14
  - including running system package updates
- chooses minimalist host OSs for running containers (e.g., Bottlerocket, Flatcar)
- regularly and automatically rebuilds, releases, and consumes internal Docker images
  - including running system package updates
- chooses minimalist base images for Docker images (e.g., scratch, busybox, alpine, chainguard)
- regularly and automatically rebuilds, releases, and consumes internally-built packages
  - think: rebuilding your PyPI package that other internal services use
    - it likely has external dependencies
    - those dependencies end up being transitive dependencies for services in your organization
    - those transitive dependencies have dependencies of their own
    - it's dependencies all the way down15
- has a process in place for bumping version numbers of internally-built packages' dependencies
  - this would trigger a new build that would subsequently trigger all the other build, release, and consumption flows
  - if you don't care about reproducible builds, you can simply unpin dependency versions16

If all that is happening at your org, your monthly workload around machine image, Docker image, and product dependency vulnerabilities is going to be much, much lower than at an org that isn't doing these things. I've helped implement almost all of the above at more than one employer. I've seen it work. I've seen the recurring CVE-related vulnerability management workload shrink.
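The version-bump cascade in the dream scenario is essentially a reverse-dependency walk: when one thing changes, everything that consumes it, directly or transitively, needs a rebuild. A minimal sketch, assuming you can export a dependency graph of your internal artifacts; the artifact names are hypothetical:

```python
from collections import deque


def rebuild_set(depends_on: dict[str, set[str]], changed: str) -> list[str]:
    """Everything that directly or transitively depends on `changed`.

    `depends_on` maps each artifact (package, image, service) to what it
    consumes. The result says *what* to rebuild, not the order; a real
    pipeline would still build these in dependency (topological) order.
    """
    # Invert "A depends on B" into "B is consumed by A".
    consumers: dict[str, set[str]] = {}
    for artifact, deps in depends_on.items():
        for dep in deps:
            consumers.setdefault(dep, set()).add(artifact)

    # Breadth-first walk outward from the changed artifact.
    affected: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for consumer in consumers.get(current, ()):
            if consumer not in affected:
                affected.add(consumer)
                queue.append(consumer)
    affected.discard(changed)  # only matters if the graph has a cycle
    return sorted(affected)


graph = {  # hypothetical artifacts
    "internal-utils": {"requests"},
    "billing-service": {"internal-utils"},
    "billing-image": {"billing-service", "alpine-base"},
    "reports-image": {"alpine-base"},
}
print(rebuild_set(graph, "requests"))
# ['billing-image', 'billing-service', 'internal-utils']
```

Bumping `alpine-base` instead would flag both images, which is exactly the fan-out that makes automated rebuilds worth the investment.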

The catch is that the larger and more complicated your environment, the harder and more expensive it's going to be to implement.

Doing all of the above isn't simply plug-and-play. If your SDLC has weak testing across the board, all the automatic happenings are likely to make you queasy. You won't know, with any confidence, whether the changes flying through the build process will break anything. All that testing infrastructure takes time and money to implement, and it's often a hard sell when an org is focused squarely on revenue-generating features. I don't think it's fair to exclude comprehensive testing from the revenue-generating bucket. Comprehensive testing cuts across multiple parts of an org to do essentially one thing: ensure, to the best of the org's ability, that a release works as intended. If it does, a change is unlikely to cause downtime and lost revenue.

Nearly everything can be traced back to insufficient testing. I've been avoiding calling out orgs by name here, but Workiva17 didn't have the greatest test suites when I started. By the time I left, it had incredibly advanced testing in place that let it release with confidence for nearly any change. Log4Shell was a minor speed bump for them. When we were pursuing our FedRAMP ATO, I converted over 150 Docker images from a smattering of older base OSs to better options (at the time): amazonlinux:2, scratch, and busybox. Comprehensive testing allowed me to make changes without much thought about what each service itself was doing; the tests that ran as part of most builds would tell me whether I'd broken the service. It was incredibly empowering. I'd argue that investing in testing is one of the best things the company ever did.

How Is It Possible to Regularly Have Zero CVEs?

I want to redefine zero.18 I'll acknowledge that I don't think it's truly possible to have zero CVEs on every scan you ever run everywhere in your environment.19 The timing of the scans themselves will always20 be a problem. Maybe you're unlucky, and a CVE that affects every AMI you have comes out shortly after your newest rebuild. It's less about the scan having no items on it and more about using CVE scans to confirm that all your mature processes are working as intended.

Let's say a CVE pops up in the idyllic environment I laid out above. Its severity is Critical. You analyze it and determine it isn't an immediate threat to your organization. The only thing requiring you to fix it is a policy on the books (one you're SOC 2 Type II audited against) that says you will remediate Critical CVEs within thirty days. You don't have to do a damn thing. You sit back, confident that all your automation will take care of it. This isn't fantasy. It exists. I've helped build it.
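A thirty-day policy like that is easy to check mechanically, which is what makes "sit back" a safe posture: you only look when automation misses the window. A minimal sketch; the 30-day Critical window comes from the example policy, while the other severities' windows are placeholder assumptions you'd replace with your own:

```python
from datetime import date, timedelta

# Remediation windows in days. The 30-day Critical window mirrors the example
# policy in the text; the other values are placeholder assumptions.
SLA_DAYS = {"Critical": 30, "High": 30, "Medium": 90, "Low": 180}


def due_date(severity: str, first_detected: date) -> date:
    """Date by which a finding of this severity must be remediated."""
    return first_detected + timedelta(days=SLA_DAYS[severity])


def is_overdue(severity: str, first_detected: date, today: date) -> bool:
    """True once a still-open finding has outlived its remediation window."""
    return today > due_date(severity, first_detected)


print(due_date("Critical", date(2024, 7, 1)))  # 2024-07-31
```

In the idyllic environment, scheduled rebuilds clear the finding long before `is_overdue` ever returns `True`.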

Let's alter that scenario a bit. You find that the CVE isn't going away when you would expect it to. Now you have something to investigate:

- Is there some process of yours that didn't run?

- Is the distro or dependency maintainer slow to fix or electing not to fix the vuln?

- some other rage-inducing thing!?

There are many reasons a CVE might not drop out of your environment, but now you can confidently say you're doing your best to track them and to determine whether the problem lies with your processes (which you'd fix) or with something you may not control (for which you may want to implement a compensating control).
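Spotting a CVE that "isn't going away when you'd expect it to" only requires remembering when each finding first appeared. A minimal sketch, assuming each scan can be reduced to a set of `(asset, cve_id)` pairs; the 14-day grace period and the placeholder IDs are arbitrary choices for illustration:

```python
from datetime import date, timedelta

Key = tuple[str, str]  # (asset, cve_id)


def update_first_seen(first_seen: dict[Key, date], current_scan: set[Key], today: date) -> dict[Key, date]:
    """Carry forward when each still-present finding first appeared.

    Findings missing from the current scan are dropped (they were fixed);
    new ones are stamped with today's date.
    """
    return {key: first_seen.get(key, today) for key in current_scan}


def lingering(first_seen: dict[Key, date], today: date, grace: timedelta = timedelta(days=14)) -> list[Key]:
    """Findings that have outlasted the grace period and deserve a look."""
    return sorted(key for key, seen in first_seen.items() if today - seen > grace)


history = update_first_seen({}, {("api-image", "CVE-A")}, date(2024, 7, 1))
history = update_first_seen(history, {("api-image", "CVE-A"), ("api-image", "CVE-B")}, date(2024, 7, 20))
print(lingering(history, date(2024, 7, 20)))  # [('api-image', 'CVE-A')]
```

Each entry that `lingering` returns is a prompt for the questions above: did a process not run, or is upstream slow to ship a fix?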

Where I Believe Nearly-Zero CVEs Can Be Achieved

Language Dependencies

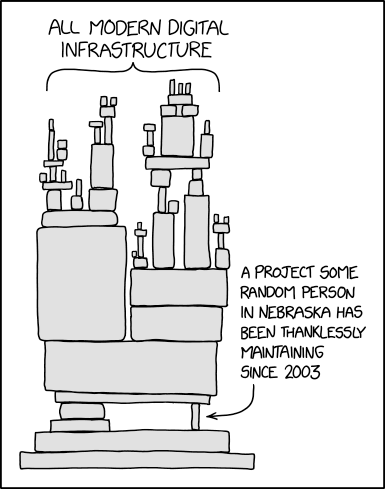

I don't include language dependencies because they are heavily affected by who maintains a given dependency, which can be anyone from a major multinational firm with a gazillion dollars21 to the lone person in Nebraska on whom the classic XKCD comic, Dependency, pins all the hopes of the modern digital age.22

I think we're at a point where orgs can get as close to zero CVEs as possible on:

- machine images
- Docker images
  - specifically, system packages

Docker Containers

Near the beginning of this post, I named the following as one driver of the sentiment that CVE smashing is a waste of time:

inability to parse and address the found issues due to volume or competing priorities

Many tech stacks have consolidated much of their running product around Docker containers. Containers provide numerous security benefits, but I've complained plenty about how they share many of the same problems as language dependencies. Chief among them: the earnestness of image stewardship varies across a huge spectrum.23

If you're using :latest versions of images produced by major distros, you'll probably be fine24 in smaller environments or ones that aren't heavily scrutinized. It becomes a vuln management mess, though, when you scale up in highly audited and highly regulated environments. How quickly upstream maintainers address CVEs turns into investigation and reporting overhead in your vuln management workflows. What can you do? Combine my "dream scenario" above with deliberately choosing minimalist base images like scratch, busybox, alpine, and chainguard. We liberally used scratch, busybox, and alpine at Workiva. We wrote a fair amount of Go, so those images worked well.25
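One way to keep base-image choices intentional at scale is a CI check that flags Dockerfiles whose shipped stage isn't built on an approved minimalist base. A minimal sketch under stated assumptions: the allowlist is taken from the images named above, matching is by bare image name or by Chainguard's `cgr.dev/chainguard/` registry path, and build-arg bases such as `FROM ${BASE}` are not resolved:

```python
import re

# Allowlist drawn from the images named in the text; how you match them
# (bare names, registry paths, mirrors) is an assumption to adapt.
MINIMAL_NAMES = {"scratch", "busybox", "alpine"}
MINIMAL_PREFIXES = ("cgr.dev/chainguard/",)

FROM_LINE = re.compile(
    r"^[ \t]*FROM[ \t]+(?:--platform=\S+[ \t]+)?(?P<image>\S+)(?:[ \t]+AS[ \t]+(?P<alias>\S+))?",
    re.IGNORECASE | re.MULTILINE,
)


def shipped_base(dockerfile: str) -> str:
    """Return the external image the final build stage ultimately starts from."""
    stages: dict[str, str] = {}  # stage alias -> external image it resolves to
    base = None
    for match in FROM_LINE.finditer(dockerfile):
        image = match.group("image")
        base = stages.get(image.lower(), image)  # follow references to earlier stages
        if match.group("alias"):
            stages[match.group("alias").lower()] = base
    if base is None:
        raise ValueError("no FROM instruction found")
    return base


def is_minimal(image: str) -> bool:
    """True if the image is on the (illustrative) minimalist allowlist."""
    name = image.lower()
    if name.startswith(MINIMAL_PREFIXES):
        return True
    repository = re.split(r"[:@]", name, maxsplit=1)[0]  # drop the tag or digest
    return repository in MINIMAL_NAMES


dockerfile = """\
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
RUN CGO_ENABLED=0 go build -o /app .

FROM scratch
COPY --from=build /app /app
ENTRYPOINT ["/app"]
"""
print(shipped_base(dockerfile), is_minimal(shipped_base(dockerfile)))  # scratch True
```

Only the final stage is checked because build stages (like the `golang` one here) never ship; that's also why a static Go binary pairs so well with `scratch`.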

If I had my way from the ground up, I'd push something like Chainguard as hard as I could. I promise you this isn't an advertisement. I can't even describe how much easier something like Chainguard Images would have made attaining a FedRAMP ATO. They:

- are producing minimalist images26

- rebuild their images daily27

- have language- and product-specific images28

- have FIPS-flavors for many of their images29

- build each system package from source30

- provide :latest and :latest-dev versions for free31

But, most importantly, zero CVEs and SDLC security are their stated goals. This wasn't realistically possible just three years ago. Hopefully, it's on its way to becoming the norm.

"But My Organization Can't Do All of This!"

That's okay. I'm not here to force an entire way of operating onto your situation. If the automated processes and system choices I've described won't work at your org, modern tooling is catching up to help in ways that don't require large process changes. Many tools have built out, or are building out, reachability analysis for CVEs. One common complaint about CVE scanning is the noise: findings that don't apply for one reason or another. A frequent reason is that the version of a piece of software you're using has a CVE, but the vulnerable code sits in a part of the software your usage never touches. We, as an industry, are starting to be able to thin the huge piles coming out of CVE scans by filtering on this concept. It doesn't mean the vulnerability itself isn't there32, but you can now carve entire chunks out of your CVE results and prioritize them differently. Hell... you could write your policies so that you only address reachable CVEs. If a CVE wasn't reachable before but becomes reachable, flow it through your vuln management processes.
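A policy of "address reachable CVEs, and re-queue anything that becomes reachable" boils down to comparing reachability verdicts between scans. A minimal sketch, assuming your tool can export a reachable/unreachable verdict per CVE; the queue names are hypothetical:

```python
def route(current: dict[str, bool], previous: dict[str, bool]) -> dict[str, list[str]]:
    """Sort findings into queues using a reachability-first policy.

    Both arguments map a CVE ID to whether your tool reports it reachable,
    for the current and the previous scan respectively.
    """
    queues: dict[str, list[str]] = {"remediate": [], "newly_reachable": [], "deprioritized": []}
    for cve_id, reachable in sorted(current.items()):
        if not reachable:
            queues["deprioritized"].append(cve_id)    # still tracked, just lower priority
        elif previous.get(cve_id) is False:
            queues["newly_reachable"].append(cve_id)  # flipped since the last scan
        else:
            queues["remediate"].append(cve_id)        # reachable, and not a flip
    return queues
```

Keeping unreachable CVEs in a deprioritized queue, rather than dropping them, covers the case where a product code change makes a vulnerable path reachable.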

Here are a few tools off the top of my head that do advanced CVE reachability analysis:

The downside to reachability analysis is that you can't do it with CVE scanning alone; more data is needed to augment the CVE findings. Depending on the tool, you may get down to function-call-level analysis, as opposed to higher-level analysis like Lacework's active file system watching, which can tell you whether a package is used but not whether a given function is called. Every little bit of filtering helps, and you should be using tools like these as a sieve.
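At its core, function-level reachability is a graph search: start from your program's entry points, follow call edges, and check whether a CVE's vulnerable function is ever reached. A toy sketch, assuming you already have a call graph and a CVE-to-vulnerable-function mapping (both hypothetical here). Real tools derive these from source or bytecode and must also handle dynamic dispatch, reflection, and other complications this ignores:

```python
from collections import deque


def reachable_functions(call_graph: dict[str, list[str]], entry_points: list[str]) -> set[str]:
    """All functions reachable from the entry points by following call edges."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        caller = queue.popleft()
        for callee in call_graph.get(caller, []):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen


def reachable_cves(call_graph: dict[str, list[str]], entry_points: list[str],
                   vulnerable_functions: dict[str, str]) -> dict[str, str]:
    """Keep only CVEs whose vulnerable function can actually be called."""
    live = reachable_functions(call_graph, entry_points)
    return {cve: fn for cve, fn in vulnerable_functions.items() if fn in live}


call_graph = {  # hypothetical, hand-written call graph
    "main": ["load_config", "serve"],
    "serve": ["xmllib.parse"],
    "legacy_export": ["imagelib.decode"],  # nothing calls this
}
vulnerable = {"CVE-A": "xmllib.parse", "CVE-B": "imagelib.decode"}
print(reachable_cves(call_graph, ["main"], vulnerable))  # {'CVE-A': 'xmllib.parse'}
```

Package-level analysis, by contrast, would keep both CVEs as long as both libraries are loaded.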

Wrapping It Up

How much of what I've discussed you can adopt comes down to the classic security question:

What should we be doing right now?

The answer is different for every org and is driven by many factors. Pushing for processes and product choices that minimize your CVE count and move you closer to a zero-CVE mentality will only strengthen your security posture. Remember, security - like ogres33 - is like an onion: layers are what will save you.

The CVE program isn't without its valid critiques34. Controversial CVEs make it through. Scanning a previously unattended environment can yield overwhelming results. That doesn't mean the entire concept is garbage. Coupled with modern tooling, CVE scanning is more useful than it's ever been.

Footnotes

1. This whole zero CVE fetish is nonsense. (2022, April 1). Reddit. Retrieved July 30, 2024, from https://old.reddit.com/r/linuxadmin/comments/ttbbz8/chainguard_its_all_about_that_base_image/i2ybtft/
2. Explain to me how Chainguard helps with this. Everywhere... | Hacker News. (2024, March 14). Hacker News. Retrieved July 30, 2024, from https://news.ycombinator.com/item?id=39705014
3. I have plenty of experience dealing with people at companies who think CVE scanning is a waste of time and money.
4. Wikipedia contributors. (2024, June 17). Common vulnerabilities and exposures. Wikipedia. Retrieved July 30, 2024, from https://en.wikipedia.org/wiki/Common_Vulnerabilities_and_Exposures
5. Wikipedia contributors. (2024b, July 9). Log4Shell. Wikipedia. Retrieved July 30, 2024, from https://en.wikipedia.org/wiki/Log4Shell
6. French, L. (2024, May 1). Verizon’s 2024 Data Breach Investigations Report: 5 key takeaways. SC Media. https://www.scmagazine.com/news/verizons-2024-data-breach-investigations-report-5-key-takeaways
7. OWASP Top 10:2021. (n.d.). Retrieved July 30, 2024, from https://owasp.org/Top10/
8. A06 Vulnerable and Outdated Components - OWASP Top 10:2021. (n.d.). Retrieved July 30, 2024, from https://owasp.org/Top10/A06_2021-Vulnerable_and_Outdated_Components/
9. Van Klinken, E. (2023, May 11). Log4Shell in 2023: big impact still reverberates. Techzine Europe. Retrieved July 30, 2024, from https://www.techzine.eu/blogs/security/105864/log4shell-in-2023-big-impact-still-reverberates/
10. Romanosky, S., Schwindt, K., & Johnson, R. (2023). Comparison of Public and Private Sector Cybersecurity and IT Workforces. RAND Corporation. Retrieved July 30, 2024, from https://www.rand.org/content/dam/rand/pubs/research_reports/RRA600/RRA660-7/RAND_RRA660-7.pdf
11. Bonnie, E. (2023, April 6). SOC 2 Type II compliance: definition, requirements, and why you need it. SecureFrame. Retrieved July 30, 2024, from https://secureframe.com/blog/soc-2-type-ii
12. FedRAMP® Vulnerability Scanning Requirements: Version 2.0. (2024). General Services Administration. Retrieved July 30, 2024, from https://www.fedramp.gov/assets/resources/documents/CSP_Vulnerability_Scanning_Requirements.pdf
13. I lied. The _real_ dream scenario is making everything Amazon's problem. But then they put in their Shared Responsibility Matrix that scanning RDS instances for CVEs is your responsibility. You scream "I'm using Aurora! Those MySQL CVEs don't directly map! No scanners recognize this friggin' thing!" at the top of your lungs, and, surprise, nobody cares. You have to report that shit anyway.
14. Typically, when I say "regularly", I mean "at least monthly".
15. ask Node.js
16. maybe meet somewhere in the middle and pin only to major versions
17. a former employer of mine
18. your move, Terrence Howard
19. I once worked for a company that had a zero-accident safety policy. They took safety seriously, with a focus on having zero safety-related incidents. They knew that accidents happen, but the point was a mentality of striving for that goal in everything you do.
20. "640K [of RAM] should be enough for anybody"
21. spoiler: this doesn't guarantee good stewardship
22. he (Randall Munroe) ain't wrong
23. It's funny how incredibly similar Docker images are to language dependencies.
24. ™️
25. When you live in Go microservice land, the binaries are generally quite happy to live and run on anything.
26. This greatly reduces the possible attack surface compared to a "bloated" image.
27. This allows you to rebuild daily and pick up updates in your environment.
28. In my experience, devs love using language- and product-specific images. Many of the popular ones you'd want are there.
29. Critical for some deployments
30. They're getting updates straight from the horse's mouth about as fast as one could ever expect.
31. You do have to pay them if you want access to the back catalog of specific-version images. You'd do this if you have or anticipate compatibility issues or simply want to control your base image more tightly.
32. Of course, your product code could be updated in such a way that the vulnerable path becomes reachable.
33. Gotta get that Shrek reference in
34. CVE-2020-19909 is everything that is wrong with CVEs | Hacker News. (2023, August 25). Retrieved July 31, 2024, from https://news.ycombinator.com/item?id=37267940