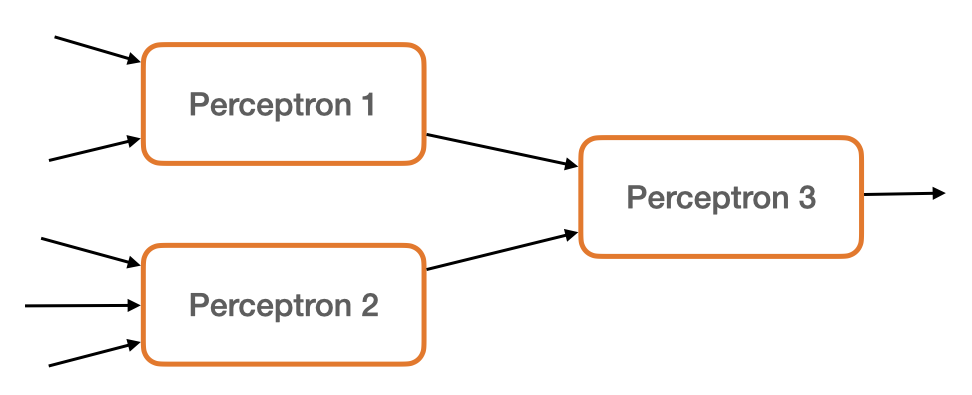

The Perceptron is a model of a single biological neuron. However, our brain doesn't have just one neuron - it has ~86 billion of them! More importantly, our brain's neurons are connected together such that the output of one neuron becomes the input to another neuron.

Could we arrange Perceptrons in a similar manner?

Seems feasible, but does this give us some sort of advantage?

Think about this..

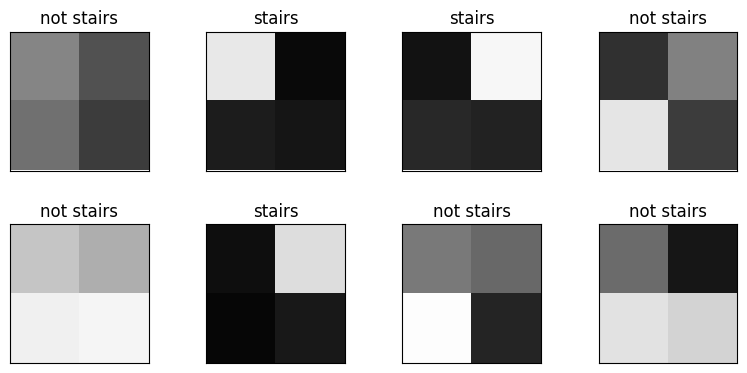

Remember that pesky example that illustrated the Perceptron's difficulty classifying 2x2 depictions of stairs?

Recall that the Perceptron struggles with this task because it is a linear model: lightening a given pixel can only ever push the model's prediction score in one direction, either always up or always down, no matter what the other pixels look like.
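To make that concrete, here is a small sketch. It assumes the usual Perceptron score for a 2x2 image, a weighted sum of the four pixels plus a bias; the symbols $w_i$ and $b$ are notation chosen here for illustration.

$$
z = w_1 x_1 + w_2 x_2 + w_3 x_3 + w_4 x_4 + b
\qquad\Longrightarrow\qquad
\frac{\partial z}{\partial x_1} = w_1
$$

The effect of changing $x_1$ on the score is the constant $w_1$. Its sign is fixed once the weights are chosen, so it cannot flip based on the value of any other pixel.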

However, to classify stairs, we clearly need some non-linear logic. Lightening pixel $x_1$ should increase or decrease our assertion of stairs depending on the value of $x_2$.

Chaining Perceptrons together gives us exactly the nonlinearity we need to solve tasks like these. (In the subsequent challenge, you're tasked with hand-crafting an MLP to classify stairs like these.)
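To get a feel for how chaining helps without giving away the stairs challenge, here is a minimal sketch on a simpler, related problem: two binary inputs, where the output should fire when exactly one of them is on (XOR). The function names `perceptron` and `tiny_mlp` and the hand-picked weights are illustrative assumptions, not part of the original lesson.

```python
def perceptron(inputs, weights, bias):
    """A single Perceptron: weighted sum of inputs, then a step function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0


def tiny_mlp(x1, x2):
    """Three chained Perceptrons with hand-picked (hypothetical) weights."""
    # Hidden layer: two Perceptrons that both look at the raw inputs.
    h_or = perceptron([x1, x2], [1, 1], -0.5)   # fires if x1 OR x2 is on
    h_and = perceptron([x1, x2], [1, 1], -1.5)  # fires only if both are on
    # Output layer: takes the hidden outputs as its inputs.
    # Fires when h_or is on and h_and is off, i.e. exactly one input is on.
    return perceptron([h_or, h_and], [1, -1], -0.5)


if __name__ == "__main__":
    for x1 in (0, 1):
        for x2 in (0, 1):
            print(x1, x2, "->", tiny_mlp(x1, x2))
```

Notice that turning on `x1` raises the output when `x2` is 0 but lowers it when `x2` is 1. No single Perceptron can do that, yet three of them chained together can.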

Observe

With enough thought and creativity, perhaps you could've derived Multilayer Perceptrons. But notice how naturally this model appears when you simply attempt to mimic the structure and behavior of the brain. This is why understanding the brain is so valuable - it's a fantastic north star for developing new approaches to machine vision (and ultimately AGI).